How to call OpenAI and Gemini with a Unified ProxAI API

TL;DR: Stop juggling multiple AI APIs. This post shows you how to use ProxAI to call any model from any provider with a single line of code, saving you time and future-proofing your app.

Introduction

Most developers stick to one AI provider and use its models for all their needs. The current major providers are OpenAI, Google Gemini, and Anthropic Claude. There are alternatives like Grok, DeepSeek, and Databricks, but switching to them requires too much effort: integrating or switching providers means reading extensive documentation and making code changes.

Thanks to new AI agents (Claude Code, Cursor, Gemini CLI, …), integration is much easier, but how much easier depends on the size of the project. As a project grows, these agents struggle to keep up, and reviewing their changes to make sure nothing is broken still takes a lot of mental effort. In the long run, this creates unnecessary lock-in to one AI provider, while better models are always out there, waiting to be adopted.

To solve this problem, engineers add AI connection layers to their tech stack, similar to database layers. They move all AI calls into that layer and write the rest of the code free of provider-specific requirements. This is a good way to deal with the issue, but the layer itself requires maintenance: the AI space is evolving fast, and keeping up with it consumes engineering cycles.
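For readers who have not built one, here is a minimal sketch of what such a hand-rolled connection layer tends to look like, assuming a simple text-in, text-out interface. The `TextModel` protocol, `ModelRegistry` class, and `EchoModel` stub are hypothetical names for illustration only; in a real layer, each adapter would wrap a provider SDK behind the same `generate` method.

```python
from typing import Dict, Protocol, Tuple


class TextModel(Protocol):
    """Hypothetical provider-agnostic interface: text in, text out."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stub standing in for a real provider adapter (e.g. an OpenAI or Gemini SDK wrapper)."""

    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRegistry:
    """Maps (provider, model) keys to adapters so application code never imports a provider SDK."""

    def __init__(self) -> None:
        self._models: Dict[Tuple[str, str], TextModel] = {}

    def register(self, provider: str, model: str, adapter: TextModel) -> None:
        self._models[(provider, model)] = adapter

    def generate(self, provider_model: Tuple[str, str], prompt: str) -> str:
        if provider_model not in self._models:
            raise KeyError(f"No adapter registered for {provider_model}")
        return self._models[provider_model].generate(prompt)


registry = ModelRegistry()
registry.register("openai", "gpt-4.1", EchoModel("gpt-4.1"))
registry.register("gemini", "gemini-2.5-flash", EchoModel("gemini-2.5-flash"))

# Switching providers is now a one-argument change in application code.
print(registry.generate(("gemini", "gemini-2.5-flash"), "Hello"))
```

Every new provider, parameter, or API change still has to be handled inside the adapters, which is exactly the maintenance cost described above.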

What is the real problem?

Before getting to the examples, I want to briefly list the real-world problems, to give a quick idea of why things are frustrating right now and what causes the engineering burden.

- Better or cheaper models are out there, but adapting your framework to them takes time.

- If the AI connection layer isn’t implemented properly, it gets harder to refactor the code base for new APIs and AI providers.

- Even if it is implemented properly, it requires constant maintenance.

- You end up writing your own ad-hoc layer instead of using a battle-tested abstraction layer.

- Staying up to date with all the different options is another burden.

Solving with ProxAI

There is a simple solution to this problem: the open-source ProxAI library. It lets developers connect to 100+ models from 10+ AI providers without changing any code in their code base. The power of ProxAI comes from:

- It is always up-to-date thanks to its community-driven approach.

- It comes with lots of free and useful tools like caching, multiprocessing, monitoring, and more.

In this post, I want to show you how fast you can make your first query to an OpenAI, Gemini, or other model with the ProxAI library, along with some examples of how intuitive ProxAI’s API design is.

The old way of making calls to OpenAI and Gemini

Let’s start with the simplest usage of two different APIs, one for OpenAI and one for Gemini:

```python
from openai import OpenAI

# The client reads OPENAI_API_KEY from the environment.
client = OpenAI()

response = client.responses.create(
    model="gpt-4.1",
    input="Write a one-sentence bedtime story about a unicorn.",
)

print(response.output_text)
```

```python
from google import genai

# The client reads the Gemini API key from the environment.
client = genai.Client()

response = client.models.generate_content(
    model="gemini-2.5-flash",
    contents="Explain how AI works in a few words",
)

print(response.text)
```

This is simple enough, but suppose we want to add message history. Which parameter does OpenAI use for message history? Which one does Gemini use? We can check the documentation, ask an AI chatbot, or better yet, ask an AI agent to implement it for us. But it does not end there: complexity grows with each model, because not all model APIs support the same structure. If we want to use, say, Llama 3 hosted on Databricks, the APIs diverge even further. When multithreading is involved, things get more complicated still.
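To make the difference concrete, here is a sketch that converts one generic list of `role`/`content` messages into the request shapes of the two SDKs. It assumes OpenAI’s role/content message dicts (accepted as `input` by the Responses API) and Gemini’s `contents` format, where the assistant role is called `model` and text goes inside a `parts` list. The helper names are hypothetical, and system prompts, images, and tool calls are deliberately left out.

```python
from typing import Dict, List

Message = Dict[str, str]


def to_openai_input(messages: List[Message]) -> List[Message]:
    """OpenAI-style messages: 'user'/'assistant' roles with a plain 'content' string."""
    return [{"role": m["role"], "content": m["content"]} for m in messages]


def to_gemini_contents(messages: List[Message]) -> List[dict]:
    """Gemini-style contents: the assistant role is 'model' and text lives in a 'parts' list."""
    role_map = {"user": "user", "assistant": "model"}
    return [
        {"role": role_map[m["role"]], "parts": [{"text": m["content"]}]}
        for m in messages
    ]


history = [
    {"role": "user", "content": "Hello!"},
    {"role": "assistant", "content": "Hello there!"},
    {"role": "user", "content": "Explain how AI works in a few words"},
]

# e.g. client.responses.create(model="gpt-4.1", input=openai_input)
openai_input = to_openai_input(history)
# e.g. client.models.generate_content(model="gemini-2.5-flash", contents=gemini_contents)
gemini_contents = to_gemini_contents(history)
print(gemini_contents[1])
```

Each additional provider needs another converter like these, plus its own rules for system prompts and unsupported roles.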

The new way of making calls with ProxAI

Let’s see how ProxAI handles all these complexities:

```python
import proxai as px

# No model specified: ProxAI falls back to a default model.
response = px.generate_text(
    prompt="Explain how AI works in a few words"
)

print(response)
```

Wait, which model did we connect to? ProxAI makes a best effort and uses a default model when none is set. Let’s see gpt-4.1 and gemini-2.5-flash in action:

```python
import proxai as px

# Same call, different provider: only provider_model changes.
openai_response = px.generate_text(
    provider_model=("openai", "gpt-4.1"),
    prompt="Explain how AI works in a few words"
)

print(openai_response)

gemini_response = px.generate_text(
    provider_model=("gemini", "gemini-2.5-flash"),
    prompt="Explain how AI works in a few words"
)

print(gemini_response)
```

That is it. But how does this solve the message history problem we mentioned? Simple: ProxAI has one unified message history format and maps it to each provider’s format under the hood:

```python
import proxai as px

response = px.generate_text(
    provider_model=("gemini", "gemini-2.5-flash"),
    # Prior conversation turns in one provider-agnostic format.
    messages=[
        {'role': 'user', 'content': 'Hello!'},
        {'role': 'assistant', 'content': 'Hello there!'}],
    prompt="Explain how AI works in a few words"
)

print(response)
```

But what if the model or provider doesn’t support message history (e.g., some Hugging Face models)? ProxAI tries to provide as much support as possible using a few techniques. In rare cases a query cannot be converted, and ProxAI will tell you so, but most of the time it just works.
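One common fallback for text-only endpoints is to flatten the conversation into a single prompt string. The sketch below illustrates that general idea; it is an assumption for illustration, not necessarily how ProxAI converts queries internally, and `flatten_messages` is a hypothetical helper.

```python
from typing import Dict, List, Optional


def flatten_messages(messages: List[Dict[str, str]], prompt: Optional[str] = None) -> str:
    """Render a chat history (plus an optional new prompt) as one plain-text prompt."""
    lines = [f"{m['role'].capitalize()}: {m['content']}" for m in messages]
    if prompt is not None:
        lines.append(f"User: {prompt}")
    # End with an open assistant turn so the model continues the dialogue.
    lines.append("Assistant:")
    return "\n".join(lines)


text = flatten_messages(
    [{"role": "user", "content": "Hello!"},
     {"role": "assistant", "content": "Hello there!"}],
    prompt="Explain how AI works in a few words",
)
print(text)
```

The trade-off is that the model sees the role labels as ordinary text, so how well it follows the conversation can vary from model to model.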

Let’s not stop there and try all available models:

```python
import proxai as px

# Send the same conversation to every available model.
for provider_model in px.models.list_models():
    response = px.generate_text(
        provider_model=provider_model,
        messages=[
            {'role': 'user', 'content': 'Hello!'},
            {'role': 'assistant', 'content': 'Hello there!'}],
        prompt="Explain how AI works in a few words"
    )
    print(f"{provider_model}: {response}")
```

There is a bonus: if you add PROXAI_API_KEY to your environment variables, ProxAI collects helpful traces and metrics for your queries, again without changing any code.
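The loop over `px.models.list_models()` sends one request at a time. When comparing many models, the calls can be issued concurrently instead. Here is a sketch using Python’s standard `concurrent.futures`, with a hypothetical stub `fake_generate` standing in for the real network call (in practice you might pass a small wrapper around `px.generate_text`). Per-model failures are collected instead of aborting the whole run. This is a general pattern, not ProxAI’s built-in multiprocessing feature.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, List, Tuple

ProviderModel = Tuple[str, str]


def fake_generate(provider_model: ProviderModel, prompt: str) -> str:
    """Stub for a network call; raises for one provider to show error handling."""
    if provider_model[0] == "broken":
        raise RuntimeError("provider unavailable")
    return f"{provider_model[1]} says: {prompt[:10]}"


def run_all(models: List[ProviderModel], prompt: str,
            generate: Callable[[ProviderModel, str], str],
            max_workers: int = 8) -> Dict[ProviderModel, str]:
    """Query every model on a thread pool; record errors per model as strings."""
    results: Dict[ProviderModel, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(generate, pm, prompt): pm for pm in models}
        for future in as_completed(futures):
            pm = futures[future]
            try:
                results[pm] = future.result()
            except Exception as exc:  # one failing provider should not stop the rest
                results[pm] = f"ERROR: {exc}"
    return results


models = [("openai", "gpt-4.1"), ("gemini", "gemini-2.5-flash"), ("broken", "model-x")]
for pm, text in run_all(models, "Explain how AI works", fake_generate).items():
    print(f"{pm}: {text}")
```

Threads suit these I/O-bound calls; the printed order depends on which request finishes first.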

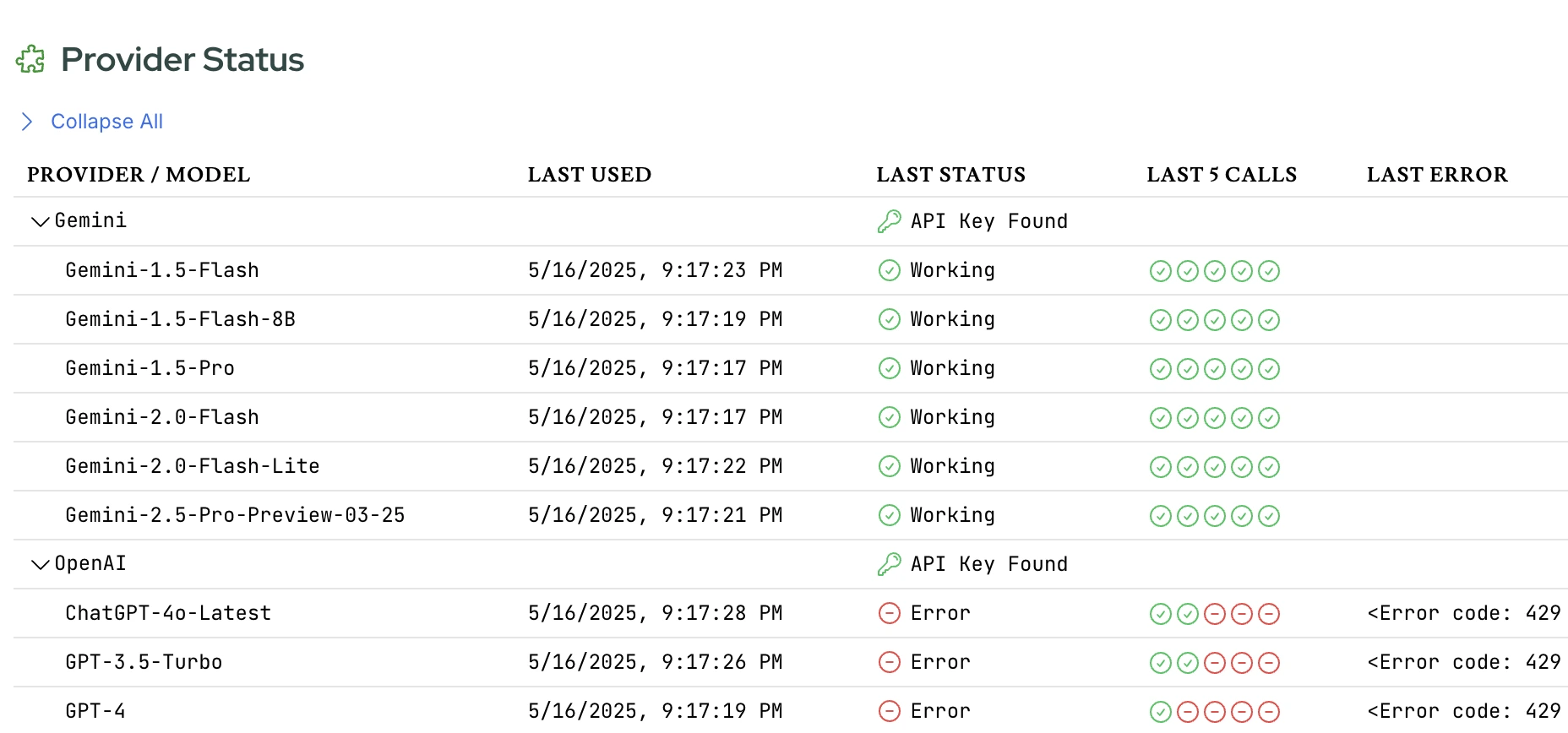

Health Monitoring:

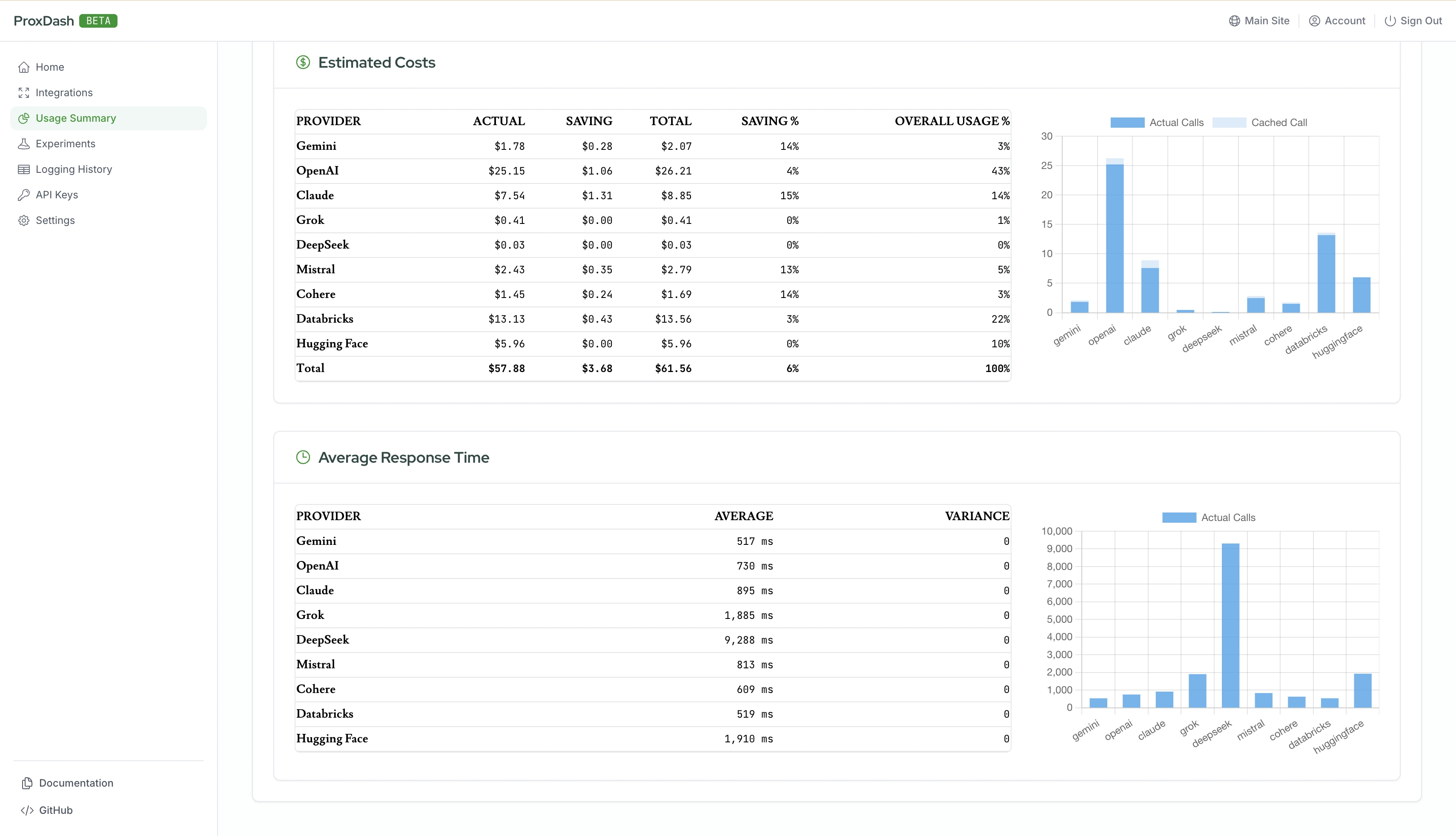

Usage Metrics:

ProxAI constantly ships new features!

Future of ProxAI: As the ProxAI team, we are constantly developing new features for:

- Unifying all kinds of AI calls (text, image, video) via a simple API

- Staying always up-to-date

- Always open-source and free

Check out our roadmap page for more comprehensive plans. Let ProxAI do the hard work of abstracting the AI connections so that your team (or your AI agent!) can focus on implementing your business logic.

ProxAI alternatives: There are other alternatives on the market right now. We have separate comparison posts that cover their pros and cons in detail; the following is a summary of where they fall short.

- Most AI unification libraries are unnecessarily complex and force you to write code in a specific way.

- The lack of an intuitive API design also adds unnecessary complications and boilerplate code.

- They don’t come with useful tools for researchers and developers while keeping things simple.

- Tracing, caching, metrics tracking, experimentation, and logging don’t come built in. ProxAI provides these features seamlessly when requested.

Frequently Asked Questions (FAQ)

Q: Can I use ProxAI to switch between different versions of the same model, like GPT-4 and GPT-4.1?

A: Absolutely. The provider_model parameter lets you specify both the provider and the exact model version, making it easy to test and switch between models like ("openai", "gpt-4") and ("openai", "gpt-4.1") with no other code changes. You can also change the default models globally; check set-global-model.

Q: Is it difficult to set up authentication for all the different AI providers with ProxAI?

A: No, ProxAI simplifies this. You can set your API keys as environment variables, and ProxAI handles the authentication for each provider under the hood, so you don’t have to manage different authentication clients in your code.
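As a rough illustration of the environment-variable approach, the sketch below checks which providers have a key configured. The variable names follow each vendor SDK’s usual convention (`OPENAI_API_KEY` for OpenAI, `GEMINI_API_KEY` for the Google Gen AI SDK, `ANTHROPIC_API_KEY` for Anthropic); treat the provider labels, the mapping, and the `available_providers` helper as assumptions rather than ProxAI’s actual lookup table.

```python
import os
from typing import Dict, List, Mapping, Optional

# Assumed mapping from an illustrative provider label to the env var its SDK reads.
PROVIDER_KEY_VARS: Dict[str, str] = {
    "openai": "OPENAI_API_KEY",
    "gemini": "GEMINI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
}


def available_providers(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return providers whose API key variable is set to a non-empty value."""
    env = os.environ if env is None else env
    return [provider for provider, var in PROVIDER_KEY_VARS.items() if env.get(var)]


print(available_providers())
```

Passing an explicit mapping instead of reading `os.environ` keeps the check easy to test without touching real credentials.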

Q: Does using a unified API like ProxAI add significant latency to the AI calls?

A: ProxAI is designed to be lightweight and efficient, and its added overhead is negligible most of the time. Plus, features like built-in caching can often lead to a net decrease in latency for repeated queries, especially in research use cases.
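The latency benefit of caching comes from never sending an identical request twice. A minimal in-memory version of the idea, assuming queries are keyed on the model plus the full request content, might look like this. `QueryCache` and `slow_generate` are hypothetical names, and this is not ProxAI’s implementation.

```python
import hashlib
import json
import time
from typing import Callable, Dict, List, Optional, Tuple


class QueryCache:
    """In-memory cache keyed on a hash of the full request."""

    def __init__(self, generate: Callable[..., str]) -> None:
        self._generate = generate
        self._store: Dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def _key(provider_model: Tuple[str, str], prompt: str,
             messages: Optional[List[dict]]) -> str:
        # Serialize deterministically so identical requests hash identically.
        payload = json.dumps([list(provider_model), prompt, messages], sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def generate(self, provider_model: Tuple[str, str], prompt: str,
                 messages: Optional[List[dict]] = None) -> str:
        key = self._key(provider_model, prompt, messages)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        result = self._generate(provider_model, prompt, messages)
        self._store[key] = result
        return result


def slow_generate(provider_model, prompt, messages):
    """Stub for a real model call that takes noticeable time."""
    time.sleep(0.1)
    return f"{provider_model[1]}: {prompt}"


cache = QueryCache(slow_generate)
start = time.perf_counter()
cache.generate(("openai", "gpt-4.1"), "Explain how AI works")  # miss: calls the stub
cache.generate(("openai", "gpt-4.1"), "Explain how AI works")  # hit: served from memory
print(f"hits={cache.hits}, elapsed={time.perf_counter() - start:.2f}s")
```

Caching only pays off for identical repeated requests; in a real system, sampling settings such as temperature would also need to be part of the key.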

Conclusion

Navigating the diverse landscape of AI models can be complex, with each provider demanding different APIs and data structures. This often leads to provider lock-in and a high maintenance burden.

ProxAI solves this by offering a single, unified API to access a wide array of models from providers like OpenAI, Gemini, and more. This simplifies development, future-proofs your applications, and allows you to easily switch to the best model for the job. With built-in features like caching, tracing, and monitoring, ProxAI is more than a convenience—it’s a powerful tool for building robust, production-ready AI applications.

Ready to streamline your AI development? Explore our documentation and start building with ProxAI today.