How I Built a Multi-Model AI Chat App via Claude Code and ProxAI

GitHub Repo: https://github.com/Nexarithm/multi_model_chat

TL;DR: I built a multi-model AI chat app that lets me ask a question and get answers from 10+ different AI models (like GPT-4, Claude, Gemini, Mistral…), then has another AI “combiner” model summarize these answers in two ways: the parts most models agree on and what is unique to each model. You can check the GitHub repo and use/fork/modify it for yourself! I felt the technical details and the small journey were worth sharing. I learned some useful things along the way; feel free to skip everything and go to “What I Learned” at the end.

The Backstory: Why I Built an App with 10+ AI Models

I realized I was asking all my questions to Gemini, OpenAI, and Claude simultaneously, and sometimes, if these models were not enough, I was also asking DeepSeek and Grok. Most of the time, I continue the conversation and follow-up questions in the tab whose answer I liked most. Close to half of the time that is Gemini, but it depends heavily on the task, and every model is good at some tasks. It is hard to cleanly classify the tasks and map them to models. There are some more objective parameters, like information quality, response speed, answer length, or writing style. There are also more subjective things, like the mood I am in at query time, how much time I think I can invest in the task, or how important the task is to me. Instead of trying to investigate why I am doing this, I decided to automate this workflow. It is a very personal workflow, but others might find the automated version useful too.

Setting the Project Scope

Let’s start by setting some boundaries and clarifying what to achieve.

The User Experience Vision

I can define this project’s goal as “instead of writing the same query to different AI chats, I want to send one query to all of them simultaneously and get a ready-made summary of the answers”. Example queries are “I have two days in NYC, can you plan my two day trip?”, “Should I learn python or javascript in 2025, after all these AI booms?”, and “List all AI chat bots in the market”. Of course there is no limit on the queries we can make, but these are illustrative starting points.
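The “one query, many models” idea above can be sketched roughly as follows. This is a minimal sketch and not the repo’s actual code: `ask_all_models`, `AskFn`, and `fake_ask` are hypothetical names, and `fake_ask` is only a stand-in for whatever real provider call (for example, one made through ProxAI) you would plug in.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical signature: takes (model_name, query) and returns the answer text.
AskFn = Callable[[str, str], str]


def ask_all_models(query: str, models: List[str], ask: AskFn) -> Dict[str, str]:
    """Send the same query to every model in parallel and collect the answers."""

    def safe_ask(model: str) -> str:
        # One failing provider should not break the whole round.
        try:
            return ask(model, query)
        except Exception as exc:
            return f"[error: {exc}]"

    # One worker thread per model; pool.map keeps the input order.
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        answers = list(pool.map(safe_ask, models))
    return dict(zip(models, answers))


def fake_ask(model: str, query: str) -> str:
    """Stand-in for a real provider call, used only for illustration."""
    return f"{model} says: answer to '{query}'"


if __name__ == "__main__":
    result = ask_all_models("Plan two days in NYC", ["model-a", "model-b"], fake_ask)
    for model, answer in result.items():
        print(model, "->", answer)
```

Threads are a reasonable fit here because each call mostly waits on the network; an async client would work just as well.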

Having a summary of the most common answers is really good, but I also didn’t want to lose the information about which model made which unique contribution. Because of that, I want my answers in two parts. First, the most common answer, which is a kind of consensus of the models. Second, a summary of what each model uniquely suggests in addition to the consensus.
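One way to picture this two-part answer is as a small data structure. The sketch below is only an illustration under my own assumptions: `CombinedAnswer`, its field names, and the rendering format are hypothetical and not taken from the repo.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CombinedAnswer:
    """Two-part result: shared consensus plus per-model unique points."""

    consensus: str
    # Maps a model name to the points only that model raised.
    unique_by_model: Dict[str, List[str]] = field(default_factory=dict)

    def render(self) -> str:
        """Format the answer as plain text, consensus first."""
        lines = ["Consensus:", self.consensus, "", "Unique contributions:"]
        for model, points in self.unique_by_model.items():
            for point in points:
                lines.append(f"- {model}: {point}")
        return "\n".join(lines)


if __name__ == "__main__":
    answer = CombinedAnswer(
        consensus="Learn Python first.",
        unique_by_model={"model-a": ["Try TypeScript later"]},
    )
    print(answer.render())
```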

Dev Constraints & Philosophy

I set some technical requirements for this project. They are mostly for my personal development and sharpening my skills, and also for using my time efficiently so that I don’t invest more than I need to in this fun project. The requirements changed a lot during the implementation, but the following list is the final rough version:

I want a web UI for this experience: There are other ways to build this project, for example a python script with shell input/output or a Google Colab notebook. I could list many pros and cons for each option, but the main idea is that I want the experience to be as close as possible to the real AI chat bots on the web.

Localhost is good enough: I didn’t want to deal with maintaining the project or make it bigger than it needs to be, because it is for very personal use and hosting on my local machine satisfies all my needs. Also, hosting it publicly might be against some AI providers’ API policies; some do not allow their APIs to be used directly in chat apps.

Vibecoding: For a while I have wanted to test vibecoding and do a project without any “old school” manual coding intervention. My obsession with how the code flow should look always prevented me from doing so. Because this project is personal and not important, it is a good time to try vibecoding! I am an avid user of Cursor agents and Claude Code in all my projects, but I wanted a completely hands-off experience here. I only used Claude Code for this project, and I did not make a single direct change to the code myself!

ProxAI: I made a new open source python library, ProxAI, for abstracting AI providers out of the code, and I wanted to use it as the backbone of this project. Recently, I have been testing my library in different environments and use cases like colab+research, python+batch processing/analyses, etc., so it is good to test it on a simple python server too. Also, I wanted to test Claude Code’s ability to understand my library from the documentation and build with it.

Maximum one day project: Yes, I didn’t want to invest more than one afternoon because I didn’t know how useful the eventual outcome would be for my personal productivity. Given the points above, I felt it was worth doing this project hackathon style. Spoiler! It turned out really well, and for most of my queries (more than 80% so far) I am only using this local website! More on that later.

The Experiment: Building an App with 100% “Vibecoding”

New directory, new repo, and Claude Code. What more do I need?

Before explaining the technical details of the process, it is worth sharing my opinions and prejudices about vibe coding. Even after this project, which was made entirely with Claude Code without a single direct code intervention, my opinions honestly have not changed much.

Opinion: Cursor agents and Claude Code are a huge part of my life right now in every kind of project, in addition to regular help from all kinds of AI chat models. By now, I have an intuition about which tasks they can handle and where I should intervene or take control from the start. It is hard to explain intuitions due to their fuzzy, cloudy nature, but I will try to clear the air and find the calm after the rain. Hope some plants can grow!

They are good at, uh, almost everything! Almost. They are really good at writing unit tests, basic styling of a website, figuring out bugs, and so on. When the code base gets larger and larger, they start to fail on some tasks. I think this will change in the near future and they will get better, but for now, I have encountered the following in my professional life and on big codebases:

- Niche bugs: For some bugs, agents struggled a lot even though I pointed out very clearly where the bugs could be. In my case these bugs were mostly very niche, and I think agents will get better at them in the near future.

- Rewriting: They rewrite existing functions even though there are alternatives in my local libraries inside the project. Sometimes directly using these functions/methods is sufficient, and sometimes minor updates to them are required. The good thing is that they do it correctly when I say “you forgot to use this function” or “don’t rewrite this”, etc. I am updating claude.md regularly, but that still does not help.

- Refactoring: Lots of people mention this online: a codebase can get out of control very easily. To avoid that, I try to control the structure very tightly and intervene by refactoring some parts when I sense it is going in a chaotic direction. It is surprising to me how bad these agents are at refactoring, even with very clear instructions. I tune my instructions a lot but still haven’t figured out how to do it correctly. Most of the time, I am not happy with the outcome and don’t feel the complexity was reduced the way it would be after a human engineer’s refactoring.

- Software design: I don’t want to dwell on this because it is well discussed. My two cents: if you are an experienced software engineer, you have a gut feeling about how the entire architecture will evolve over time as new requirements arrive. You need to make decisions that can impact the entire code base much later, sometimes months later. That wisdom doesn’t come to AI agents automatically, and it is very hard to make them decide on the correct thing even with lots of instructions, guidance, and explanations of the potential issues. Jeff Bezos said the first visible results of a CEO’s daily decisions come after two years; if something is wrong today, it is because of a mistake made two years ago. It might not be that drastic for software development, but you can still easily be happy about or regret a decision you made 4-5 months ago when designing the architecture.

Most of these points apply to big code bases but even for this small multi model AI chat project, done in a single afternoon, I encountered some of these issues. Let’s deep dive into that.

Claude Code Details

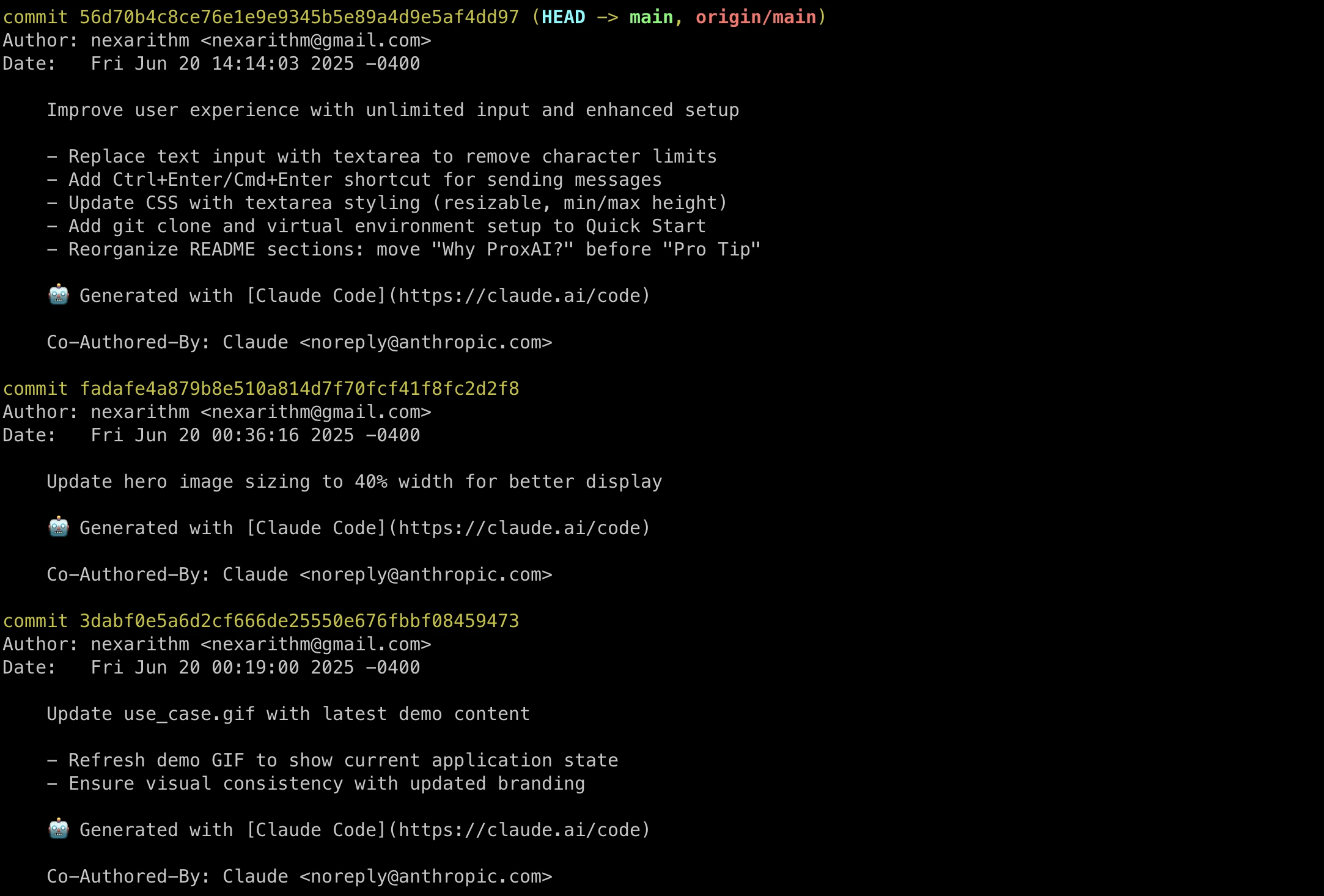

Simple stats: I had no idea how long it would take to implement this multi model AI chat bot, how many commits would be required, or whether I would even get a meaningful end result.

- It took 5 hours to build a working, satisfactory result.

- A little bit more than 20 commits to make it ready and shareable.

- Claude Code API cost: $11.20 (2622 lines added, 675 lines removed)

ProxAI documentation via Claude Code: Because my ProxAI library is pretty new and has not been adopted by any users yet, I was curious how Claude Code would handle its details. In short, ProxAI is a library that tries to unify AI provider API calls and lets users use a built-in cache, switch models, track metrics on a dashboard, etc. I provided a direct link to my documentation page, https://www.proxai.co/proxai-docs, and asked Claude to download the content and make all provider calls using ProxAI. This approach worked quite well, and Claude Code understood my library really well.

Ah, refactoring moments: After some progress, my spidey senses started to tingle and my inner voice said, very quietly but effectively, “Are you sure it is going in the right direction?” So, I decided to do some refactoring before continuing to implement more features. I hit the same issue I have in my other projects. After lots of rewriting the specifications, limiting the parts Claude Code needs to focus on, starting from scratch, and asking in different ways, I ended up with somewhat more meaningful functions.

The end result is still not at human coherence level, but it is good enough to make Claude Code’s life easier in the future. This is very strange to me, because when I read code bases in my professional life, I can easily see the poetry in the architecture and design. I encounter lots of “Aha!” moments and admire the genius of the writer. I don’t mean software engineers doing simple stuff in a complex way because of their ego. Well written code implies lots of things: it is easy for other software engineers to read and understand, flexible enough to modify in the future if needed, and beautiful in its flow. I remember Magnus Carlsen saying that computer chess engines play like stupid chess players but always beat humans. I feel similarly about the code produced after refactoring. It somehow delivers, but the planning behind it is not easy for humans to digest. Soon, we might not need to investigate and modify the code ourselves, and AI code manipulation might be the only way to produce production-quality work. But for now, we are not at that level, so we eventually need to intervene in these code bases because of bugs or huge new feature requirements.

By the way, the future in which we are not writing code anymore may be very close. I am only talking about the current status quo. We can already think of deep neural nets like that: no one writes kernels by hand any more. The only meaningful way we can manipulate deep neural nets is through the data, the objective function, and the optimizer. Soon, code bases may end up the same way, and the only meaningful/effective/positive way to change them may be through AI agents that work for us. The difference between neural nets and code bases is that we are already very familiar with lots of programming practices and can understand a huge variety of code, whereas we lost the battle with kernels very fast. Three by three matrices that detect vertical or horizontal lines were simple to understand just by looking directly at the matrix, but after that, we lost. In programming, we still have an opportunity to direct these agents to write code that is understandable by humans and that somehow has the poetry/spirit of the code. These agents are already trained on the artifacts that we created for ourselves to understand. This is a huge advantage. With AlexNet, we were trying to achieve the goal with tools that we never truly understood, or without enough mathematical tools to create meaningful components.

Tuning Combiner Prompt: The combiner model’s objective is to take the 10+ model answers and create the common consensus part and the summary of each model’s unique contributions. It took maybe 6-7 iterations with Claude Code to get a good prompt for this task. I think if I had done this manually, it would have been a little easier to achieve what I wanted. But this is not an issue. The final system instruction and prompt are as good as I expected and I didn’t break the
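To make the combiner step concrete, here is a rough sketch of how such a prompt could be assembled from the collected answers. It is not the prompt that ended up in the repo: the instruction wording, `COMBINER_INSTRUCTIONS`, and `build_combiner_prompt` are all hypothetical and only illustrate the shape of the task.

```python
from typing import Dict

# Hypothetical instruction text; the real prompt took several iterations to tune.
COMBINER_INSTRUCTIONS = (
    "You are given answers from several AI models to the same question.\n"
    "1. Write the consensus: the points most models agree on.\n"
    "2. For each model, list what it adds that the consensus does not cover."
)


def build_combiner_prompt(query: str, answers: Dict[str, str]) -> str:
    """Join the instructions, the question, and every labeled model answer."""
    parts = [COMBINER_INSTRUCTIONS, "", f"Question: {query}", ""]
    for model, answer in answers.items():
        # Label each answer so the combiner can attribute unique points.
        parts.append(f"### Answer from {model}")
        parts.append(answer.strip())
        parts.append("")
    return "\n".join(parts).rstrip()


if __name__ == "__main__":
    demo = {"model-a": "Learn Python.", "model-b": "Learn Python, then JavaScript."}
    print(build_combiner_prompt("Python or JavaScript?", demo))
```

Labeling each answer with its model name is what lets the combiner attribute unique contributions back to a specific model.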

Image Generation: I generated some images for the readme.md file with Gemini and OpenAI and added them manually to my code base. I asked Claude Code where I should put these images. Check the final readme file: https://github.com/Nexarithm/multi_model_chat

Final Product

I started this to test the hands-off vibe coding experience and to see how Claude Code works with my ProxAI library. I didn’t plan to use the outcome of this afternoon hackathon at all. The implementation experience was important to me as a way to figure out the user experience. But, oh man. I started to use this localhost website as the starting point for my daily queries. I am not even asking other chat models in different tabs anymore; I start in this tab and only move to other chat tabs if the result is not promising. The product feels like an MVP and delivers the most basic thing that I am looking for. I am planning to add the following features for myself, and probably no more than these, to make it more robust for my personal usage.

- Chat history: There is no saving system for chat history right now. Simple file writing should work.

- Chat summary: I like the 2-3 word conversation summaries that current chat web apps show, so I will add this to my project too. Very easy with ProxAI.

- Changing the query type: I want a few more options than only creating a consensus of the models. Not every task in my personal prompts falls into this category. Adding a voting system could also be useful.

- Changing active models during the chat: Right now, it is only possible to start with a fixed set of models and go further with these. I want to have an option to dynamically change the model list.

- Changing Max Token Counts: I realized this is an important feature that needs to be controllable. It should be adjustable separately for the regular model queries and the combiner model query. Because I am making 10+ model queries at the same time, the price of a query is always much higher than asking one model as a regular user. I am limiting max tokens to 5k, but for some tasks I needed to increase that and did so manually in the code. Having an option in the web UI makes sense to me.
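For the chat history item in the list above, “simple file writing” could look something like the following. This is only a sketch under my own assumptions: the JSON Lines layout, the `append_turn` and `load_history` helpers, and the example file path are hypothetical and not part of the repo.

```python
import json
from pathlib import Path
from typing import Dict, List


def append_turn(path: Path, role: str, content: str) -> None:
    """Append one chat turn as a single JSON line."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as f:
        record = {"role": role, "content": content}
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def load_history(path: Path) -> List[Dict[str, str]]:
    """Read all saved turns back in order; missing file means empty history."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    history_file = Path("chat_history/example_chat.jsonl")  # hypothetical location
    append_turn(history_file, "user", "I have two days in NYC, can you plan my trip?")
    append_turn(history_file, "combiner", "Consensus: ...")
    print(load_history(history_file))
```

An append-only file keeps each save cheap, and one file per conversation would make it easy to list past chats later.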

Please test it yourself to see if it is useful for you too. Feel free to open PRs on the repo or request features other than these next steps. Bonus point: you can use a ProxDash API key to track all metrics, logs, and expenses of each model if you want to stay in control of that. I regularly check how much I have spent to see if it is worth using. There are also some privacy options in ProxAI and ProxDash for using it more securely, if you are interested.

What I Learned: The Surprising Power of Personal AI Tools

Personal Websites: The first thing I realized, which was kind of surprising to me, is that we are living in a time when making websites for personal needs is very easy. It felt very 2000s. A dummy design is good enough for me, it works on localhost, and it solves my problem. I didn’t need to deal with any CSS, any javascript effects, or any boring parts. I know that if I want to push it to some server and host it (for my usage only), it will add lots of headaches, as Andrej Karpathy recently mentioned. We will get there eventually, but for now there is no value for me in hosting it on a different computer. Before these tools, I think this project would not have been worth trying, because there was a huge chance it would take more than 5 hours or one evening. I would probably have gone with just the shell UI option, but a personally crafted website is more pleasant to use, and I don’t want to drastically change my daily habits around AI chat web apps. I convinced myself that it is worth doing more projects like this one to increase my productivity. 5 hours of work + $11.20 Claude Code bill.

Vibecoding: Sounds good, doesn’t work, with a catch: “in large projects”. Even in this really simple project, things got out of control a couple of times and I needed to intervene with refactorings. I highly recommend checking the “Ah, refactoring moments” section if you jumped directly to this section.